We’ve recently switched our Rails applications to a new Docker-powered infrastructure with 1-minute deploys, per-minute database backups and a whole bunch of other useful features.

Old Infrastructure

Managing, maintaining and deploying to the infrastructure that hosts static websites we wrote 20 years ago, web applications we built in ASP Classic 15 years ago, ASP.NET projects and Rails projects we created yesterday can become a handful at scale. We have to ensure our infrastructure is reliable and easy to deploy to.

We started building new projects in Rails about 3 years ago. After looking at all the hosting options available, we decided to go down the DIY route, primarily because of the high prices of PaaS (Platform as a Service) solutions such as Heroku and OpenShift. Clients wouldn’t be willing to pay over the norm to host a run-of-the-mill site just because we made the decision to use Rails. Some may say that’s the penalty you pay for using Rails to build basic sites.

After months of research and experimentation we built ourselves a Rails box on DigitalOcean: Nginx + Postgres coupled with Unicorn. We used Mina for deployment with a bit of scripting to create the database and get the sites live. Mina is ultra-fast, so our deployments only took around 30 seconds. We didn’t want to lose too much of this speed going forward.

We monitored resource usage via NewRelic to determine when new boxes were needed and just cloned our original snapshot to bring the box up. It worked well with the small number of apps we had but it wasn’t a perfect setup for a number of reasons:

- Every time we added a new box we added to our maintenance workload since we had no overarching control of all boxes.

- Sites’ DNS records were being pointed directly at individual boxes, leaving us no room to manoeuvre in case of individual hardware failure, such as rapidly reassigning elastic IPs.

- If a site became popular we had no room to scale up the workers to cope with demand without moving them onto a different box.

- Deployment scripts had to be updated for each site.

We thought about building an overarching management solution that would provision boxes using Chef and oversee the deployments itself, reusing most of the Mina code to essentially create a Chef/Nginx/Unicorn/Mina GUI. The time needed to build the solution, the thought of building an interface in front of so many applications and the fact it would be tightly tied to running just Rails applications eventually put us off this idea. (Others have produced similar paid and unpaid solutions; see Intercityup and Cloud66.)

We wanted a solution that would tick the following boxes:

- Lessen our workload with regard to machines + maintenance

- Help us create a more reliable infrastructure

- Leave us free to run more than just Rails applications

- Reduce our reliance on scripts to get basic tasks done

- Similar cost to our current solution

- Allow us to continually add new projects without headaches

The Solution

Docker (+ DockerCloud) is that solution for us. We’ve now been running (nearly) all of our Rails applications from our new Docker infrastructure for nearly a month.

Our Rails applications are packaged as Docker ‘Images’ ready to be run as ‘Services’ on our own private platform based on AWS EC2 hardware. The clever dockercloud/haproxy image helps to route requests by hostname to the correct Service via links + a private software network established between ‘Services’. We’re not using the load balancing capabilities of HAProxy for any clients right now so currently its purpose is more of a router, terminating web requests and proxying them to the correct ‘Service’ based on hostname.
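
To make the routing idea concrete, here is a minimal Ruby sketch of hostname-based routing. It is only an illustration of the concept, not how dockercloud/haproxy is implemented, and the hostnames and backend addresses are hypothetical.

```ruby
# Hypothetical routing table: hostname => backend Service address.
# All names and addresses here are made up for illustration.
ROUTES = {
  "www.example-client.com"  => "client-site-1:80",
  "shop.example-client.com" => "client-shop-1:80"
}.freeze

# Return the backend for a request's Host header, or nil if unknown.
# Any port suffix (e.g. ":443") is stripped and matching is case-insensitive.
def backend_for(host_header)
  hostname = host_header.to_s.split(":").first.to_s.downcase
  ROUTES[hostname]
end

puts backend_for("WWW.Example-Client.com:443") # prints client-site-1:80
```

A request for a hostname that is not in the table returns nil, which a real router would turn into an error response.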

We developed a base image on top of the Ruby:Slim image, pre-installed with a number of large key gems (Nokogiri, Libv8, etc.), Nginx and some dependencies (ImageMagick, Postgres-dev, etc.). Our Rails applications now all have a small Dockerfile to describe what’s needed to get them ready to run and a Procfile to describe how to run them.

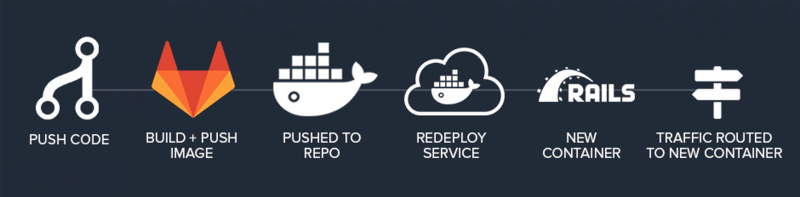

Deploying a new site onto the infrastructure is a case of creating the Dockerfile, the Procfile and a .gitlab-ci.yml file. We’re using GitLab CI to both build and push our images. Our GitLab box has a great internet connection, which helps to speed up the ‘bundle install’ and ‘docker push’ processes versus our office internet connection. We only deploy from master, meaning we now work out of a ‘development’ branch and merge into master when we’re ready for code to go live.

Because Docker (and the Hub) cache images layer by layer, only the layers that have changed need to be rebuilt and pushed. Once the initial build/push has taken place (which takes around 5-6 minutes), changes to a site take around 50 seconds to be built and pushed. This process could be sped up by upping the hardware our GitLab instance runs on. Given the hands-off approach, we’re quite happy with the deployment speed.
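
The effect of the layer cache can be sketched in a few lines of Ruby. This is a simplified model rather than Docker’s actual implementation: it assumes each layer’s cache key depends only on its instruction and on the layer beneath it, and the build steps shown are hypothetical.

```ruby
require "digest"

# Each layer's cache key depends on its own instruction and on the key of
# the layer beneath it, so a change invalidates that layer and all above it.
def layer_keys(instructions)
  parent = ""
  instructions.map do |instruction|
    parent = Digest::SHA256.hexdigest(parent + instruction)
  end
end

# Count how many leading layers can be reused from a previous build.
def reusable_layers(previous, current)
  layer_keys(previous).zip(layer_keys(current)).take_while { |a, b| a == b }.size
end

# Hypothetical build steps; the "code@..." entries stand in for the
# application source changing between two commits.
before = ["FROM base", "bundle install", "code@v1", "assets:precompile"]
after  = ["FROM base", "bundle install", "code@v2", "assets:precompile"]

puts reusable_layers(before, after) # prints 2
```

In this model the base image and the gem install are reused, so only the steps from the code change onwards are rebuilt, which is why small changes build much faster than the first build.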

Our services on DockerCloud are set to automatically redeploy upon detecting an updated image. Since the images are ready to run (bundle install and assets:precompile have already been run), the redeploy takes seconds. On average, changes to our applications take just 1 minute to be in production, with zero downtime.

Services will automatically deploy themselves to the emptiest node. Service discovery via ‘links’ means it doesn’t matter which node a Service is deployed to: the load balancer knows where the Service is running and can route the request over a software network to it. The upshot of service discovery is that we could even have applications distributed over multiple cloud providers.
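
As a rough illustration of the placement rule, the Ruby sketch below picks a node for a new container. It assumes that “emptiest” means the node running the fewest containers; the Node record and the node names are hypothetical.

```ruby
# Hypothetical node record: a name and the number of containers it runs.
Node = Struct.new(:name, :container_count)

# Pick the node currently running the fewest containers.
def emptiest_node(nodes)
  nodes.min_by(&:container_count)
end

nodes = [
  Node.new("node-a", 7),
  Node.new("node-b", 3),
  Node.new("node-c", 5)
]

puts emptiest_node(nodes).name # prints node-b
```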

Backing services are slightly more complex than websites so we’re not running PostgreSQL as a service on our infrastructure just yet. We’ve employed the use of Amazon RDS to provide our applications with a reliable database server to use. RDS comes complete with great backups, automated updates and failover capabilities.

We’re using Route53 in front of an elastic IP for our DNS. This gives us the ability to cope if the hardware hosting the load balancer fails. Another box ticked!

Conclusion

Deploying sites from scratch no longer requires the deep knowledge of Unicorn, Nginx and Mina that our old solution demanded. The per-site cost of our new solution is only slightly higher than that of our DIY solution. The time we’ve gained from not having to provision, update and rescue boxes is now being better spent creating the applications themselves.

With everyone announcing Docker support, including Microsoft with ASP.NET 5, we’re excited to be in a position to take advantage of this when delivering solutions for our clients. We can now easily and reliably deploy anything that we can expose to our load balancer over HTTP: that’s Node.js, PHP, ASP.NET and lots more. We’ve essentially got a scalable hosting platform for any web application.